Multi-Threading is a big topic that is often shunned for its rumored “difficulty”. In many ways it is a new process of thinking if you’re used to the traditional style of non multi-threaded coding, but I can make the case that it is more natural to us as our brains are naturally multi-core and multi-threaded!

This article will begin with the classical view on multi-threading. It is basically the conversion of single threaded designed programs to multi-threaded ones.

- Ensuring Mutual Exclusion

- Avoiding Deadlocks

Of course there is more to the topic, but under each implementation, the latter two are at the core of it. Let’s get started!

Introduction: Please skip to next section if you know about Processes and Thread fundamentals.

All current machines execute work broken down into discrete units called threads. Now, of course when you launch a program, it needs to be transformed from passive to active state. That’s when a program is summoned into a process. Normally, the process would have only one thread of execution and that would be the process itself. All well and good for problems that can be solved serially, but for most daily uses, concurrency is needed!

Concurrency doesn’t have to be constrained to the problem domain. For example, a process can call the main thread to execute the program and have a second one monitor something else. Examples: game + chat client, text editing + spell checker + battery monitoring etc.

So apart from problems that require a multithreaded solution, even serially solved ones can benefit from multithreaded implementations.

The Pillars:

Mutual Exclusion:

A golden rule that entails that: given threads 1…n, only one of those threads can be executing a piece of code (usually small and has to deal with memory access, known as the Critical Section) at any given time.

This code may or may not be the same, but it does read/write to some shared resource amongst the other threads, hence making it a “critical section”.

Deadlocks:

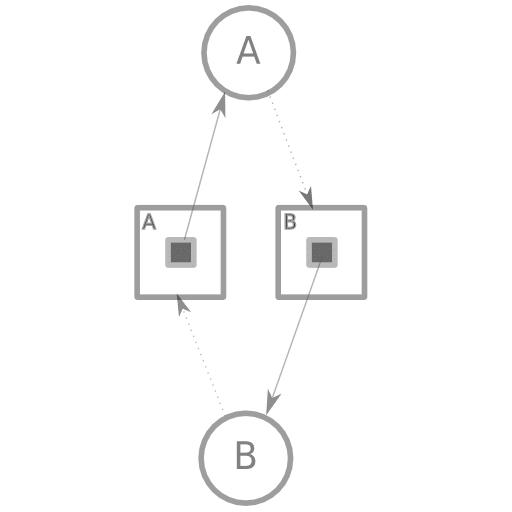

Given thread A and B: thread A is waiting on a resource that thread B has locked and similarly, thread B is waiting on a resource that thread A has locked.

// Resources Available: One of A and one of B.

void Thread1() {

lock( A );

lock( B );

// Dead lock due to resource B taken by Thread2

}

void Thread2() {

lock( B );

lock( A );

// Dead lock due to resource A taken by Thread1

}

As seen above, both Thread1 and Thread2 will be in a deadlock (wait forever), as their resource needs are interleaved! It is important to mention that there is only one of each resource A and B; the deadlock wouldn’t occur if there were two of each for example and of course only one of Thread1 and Thread2.

Circles: Processes. Squares: Resources. Dotted Arrows: Request for resource. Solid Arrows: Resource’s current owner.

Next multithreaded article will jump to experimental uncharted territories. Preview: will be designing almost lock free datastructures. The threads will be so well synced and orchestrated that lock/unlocking will be almost inexistant!